Abstract

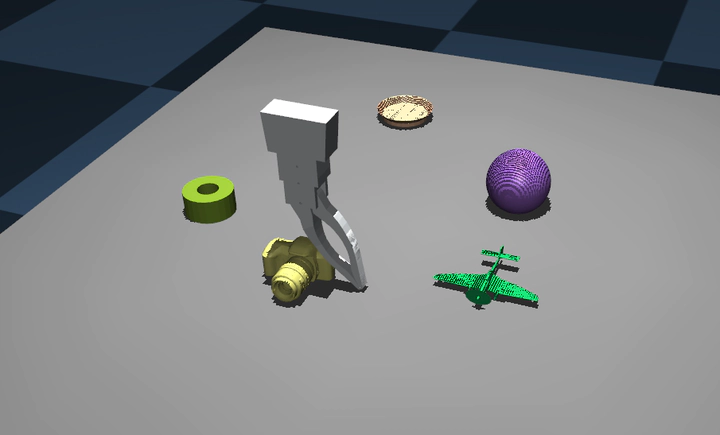

Recent work on robot learning with visual observations has shown great success in solving many manipulation tasks. While visual observations contain rich information about the environment and the robot, they can be unreliable in the presence of visual noise or occlusions. In these cases, we can leverage tactile observations generated by the interaction between the robot and the environment. In this paper, we propose a framework for learning manipulation policies that fuse visual and tactile feedback. We consider two control problems: localizing a gripper with respect to the environment image, and navigating it to desired states. Our method uses a learned Bayes filter to estimate the gripper’s state from tactile observations, conditioned on the environment image. We then use deep reinforcement learning to solve the localization and navigation problems, with the policy taking the belief over the gripper’s state and the environment image as input. We compare our method against two baselines: one in which the agent uses tactile observations directly with a recurrent neural network, and one in which it uses a point estimate of the state instead of the full belief state. We also transfer the policies to the real world and validate them on a physical robot.
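
For reference, the generic recursive Bayes filter update that a learned filter of this kind approximates can be sketched as follows. The notation is an assumption made here for illustration and is not taken from the paper: $s_t$ denotes the gripper state, $a_{t-1}$ the previous action, $o_t$ the tactile observation, $I$ the environment image, $b_t$ the belief over states, and $\eta$ a normalizing constant.

$$
b_t(s_t) = \eta \, p(o_t \mid s_t, I) \sum_{s_{t-1}} p(s_t \mid s_{t-1}, a_{t-1}) \, b_{t-1}(s_{t-1})
$$

Under this reading, a point-estimate baseline would pass the policy only a single state, for example $\hat{s}_t = \arg\max_{s_t} b_t(s_t)$, whereas the belief-based policy receives the full distribution $b_t$.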