Abstract

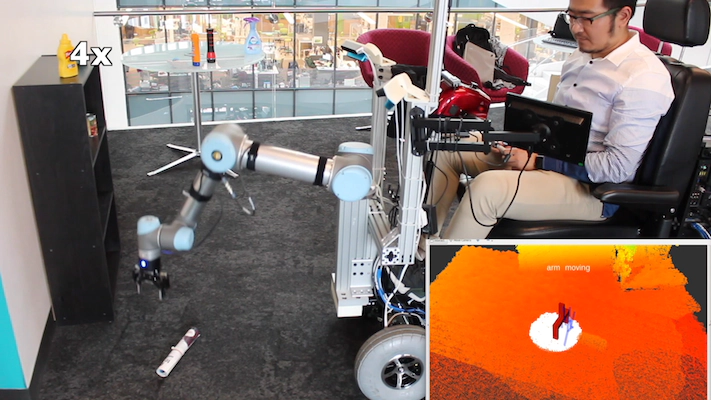

To be truly useful, assistive robot manipulators must be able to autonomously pick and place a wide range of novel objects. However, current assistive robots lack this capability. Assistive systems also need an interface that is easy to learn, use, and understand. This paper takes a step in this direction. We present a robot system, composed of a robotic arm and a mobility scooter, that provides both pick-and-drop and pick-and-place functionality in open-world environments without modeling the objects or the environment. The user selects an object directly in the world with a laser pointer, and the system gives feedback by projecting an interface into the world. Our evaluation over several experimental scenarios shows a significant improvement in both runtime and grasp success rate relative to a baseline from the literature, and further demonstrates accurate pick-and-place capabilities for tabletop scenarios.